RSA vs ETA Google Ads – 4 Month Test

When Google introduced Responsive Search Ads to all advertisers in July 2018, I saw it as an opportunity to simplify the workflow for a common optimization task performed by search marketers: ad copy testing. It has long been a best practice to run multiple variations of ad copy simultaneously, accrue data, and decide winners and losers based on your KPIs.

The simplest way to test ads is what is called “A/B testing,” where you have two versions, an “A” and a “B,” and you test them against each other.

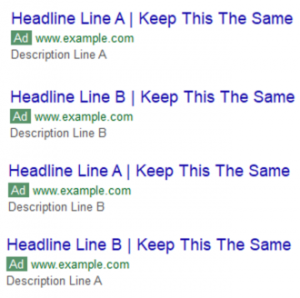

Another way to test ad copy is through multivariate testing, where, as the name implies, you test out multiple versions of ads, compare each version’s performance with a control, and decide winners and losers from the lot. This requires more setup and more total ads. As an example, if I wanted to test out two different ad headlines and two different ad description lines, I would end up with four different ads:
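As a quick sketch of how those four ads come about, the snippet below pairs every headline with every description. The headline and description strings are placeholders, not copy from any of our accounts.

```python
from itertools import product

# Placeholder copy for illustration only.
headlines = ["Headline A", "Headline B"]
descriptions = ["Description 1", "Description 2"]

# Every headline paired with every description: 2 x 2 = 4 ads.
ads = [
    {"headline": h, "description": d}
    for h, d in product(headlines, descriptions)
]

for i, ad in enumerate(ads, start=1):
    print(f"Ad {i}: {ad['headline']} | {ad['description']}")
```

Adding a third headline and a third description to the lists already brings the total to nine ads, which is why the count grows so quickly.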

Throw in more Headlines and more Descriptions, and you’ll quickly find that you’re running 10, 12, 20 ads per ad group. Not an overwhelming task for a competent search marketer, especially with Excel and pivot tables, but laborious nonetheless.

And then comes the Responsive Search Ad. Responsive Search Ads (RSAs) gave us the ability to combine these 4, 10, 12, or 20 unique ad variations into one ad unit. We can combine 3 to 15 Headlines and 2 to 4 Descriptions into one ad unit, and let Google mix and match the ad entities using its machine learning algorithms to find the best-performing combination of ad copy out of as many as 43,000 variations. Google was even generous enough to let us keep some control over the combinations by “pinning” certain headlines, ensuring they only appear in the first headline slot (or second, or whichever; pinning also applies to description lines).
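To get a feel for where a number in the tens of thousands can come from, here is one possible counting. It is an assumption for illustration, not Google’s documented math: it counts ordered picks of 3 headlines out of 15, paired with ordered picks of either 1 or 2 descriptions out of 4.

```python
from math import perm

MAX_HEADLINES = 15
MAX_DESCRIPTIONS = 4

# Assumption: each ad shows 3 headlines, and order matters.
headline_arrangements = perm(MAX_HEADLINES, 3)

# Assumption: each ad shows 1 or 2 descriptions, and order matters.
description_arrangements = perm(MAX_DESCRIPTIONS, 1) + perm(MAX_DESCRIPTIONS, 2)

total_variations = headline_arrangements * description_arrangements
print(f"{total_variations:,} possible variations under these assumptions")
```

Whatever the exact counting rules, the point stands: no one is going to build that many ads by hand.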

Assuming Google’s algorithm works as advertised, RSAs would save marketers the time and effort of creating each individual ad combination, pulling the data into a pivot table, and analyzing the data to determine winners and losers. Time that can then be spent on more strategic planning, more testing, other campaign optimizations, etc.

But have RSAs been effective at boosting performance?

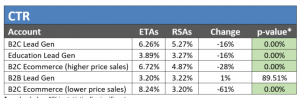

At Granular, we have adopted RSAs across many of our accounts, but we kept our Expanded Text Ads (ETAs) active as well (it’s hard for PPC marketers to give up total control). After four months, here is how RSAs have measured up against ETAs across some of our accounts:

*p-value below 5% is statistically significant

In every account except the B2B Lead Gen account, CTR on RSAs was at least 16% lower than on ETAs. While the B2B Lead Gen RSAs are off to a promising start, we still have a way to go before these results are statistically significant, so we can’t say for sure yet that RSAs are yielding a higher CTR (if you want to count +1% as “higher”).
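For readers who want to run this kind of check on their own data, one common way to test whether two CTRs differ is a two-proportion z-test. The sketch below is illustrative and not necessarily the exact test behind the numbers above; the click and impression counts are made up.

```python
from math import erfc, sqrt


def two_proportion_z_test(successes_a, trials_a, successes_b, trials_b):
    """Return (z, two-sided p-value) for the difference between two rates."""
    rate_a = successes_a / trials_a
    rate_b = successes_b / trials_b
    # Pooled rate under the null hypothesis that both rates are equal.
    pooled = (successes_a + successes_b) / (trials_a + trials_b)
    std_err = sqrt(pooled * (1 - pooled) * (1 / trials_a + 1 / trials_b))
    z = (rate_a - rate_b) / std_err
    # Two-sided p-value from the standard normal distribution.
    p_value = erfc(abs(z) / sqrt(2))
    return z, p_value


# Hypothetical data: clicks and impressions for ETAs vs. RSAs.
z, p_value = two_proportion_z_test(
    successes_a=500, trials_a=10_000,  # ETA clicks, impressions
    successes_b=400, trials_b=10_000,  # RSA clicks, impressions
)
significant = p_value < 0.05
print(f"z = {z:.2f}, p-value = {p_value:.4f}, significant: {significant}")
```

The same function works for conversion rate by passing conversions and clicks instead of clicks and impressions.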

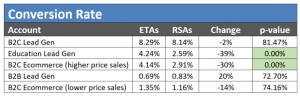

Conversion rate shouldn’t be affected by the ad format, and for the most part our results indicate that, with three of the five accounts’ results still inconclusive. However, in the two accounts where we have reached statistical significance for conversion rate, RSAs have led to a 39% (Education Lead Gen) and 30% (B2C Ecommerce [higher price sales]) decrease in conversion rate. Again, this drop in conversion rate is unexpected, and it is worth pointing out that while we were testing different ad formats in this study, the ad copy and landing pages remained largely the same across both ETAs and RSAs in all accounts (RSAs allowed us more text space to add a few more calls to action).

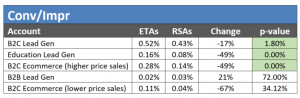

When evaluating CTR and conversion rate performance, I always try to keep in mind the combined effect of the two on conversions, so I also examine changes in conversions per impression. In any given test, conversion rate may go down, but if CTR goes up by a larger factor, the result can still be more conversions overall. Our results show that for each account that has reached statistical significance for this metric, RSAs have led to a significant decrease in conversions per impression, with Education Lead Gen and B2C Ecommerce (higher price sales) as much as 49% lower.
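Conversions per impression is simply CTR multiplied by conversion rate, which is why a gain in one can outweigh a loss in the other. Here is a minimal sketch with hypothetical rates (not figures from our accounts), where conversion rate drops by a fifth but CTR rises by half:

```python
def conversions_per_impression(ctr, conversion_rate):
    """Conversions per impression = (clicks / impressions) * (conversions / clicks)."""
    return ctr * conversion_rate


# Hypothetical rates for illustration only.
control = conversions_per_impression(ctr=0.040, conversion_rate=0.050)
variant = conversions_per_impression(ctr=0.060, conversion_rate=0.040)

# Relative change of the variant against the control.
relative_change = variant / control - 1
print(f"Control: {control:.4%} | Variant: {variant:.4%} | Change: {relative_change:+.1%}")
```

In this made-up case the variant comes out ahead by roughly 20% despite the lower conversion rate. Our RSA results did not have that cushion, since CTR and conversion rate both fell.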

While the results for a few of the accounts’ metrics still need more data before we’ve reached statistical significance, each metric that has reached this mark has shown that Responsive Search Ads are having a negative impact on account performance.

It is rare that Google releases new features that do not help advertisers reach their goals. After all, when advertisers win, Google wins. But this appears to be one exception. One possible explanation, at least for the decrease in CTR, has to do with how RSAs work. As mentioned, RSAs combine the list of Headline and Description text we give them and use machine learning to find the combinations that work best (i.e., get the best CTR). It’s possible that Google’s machine learning algorithm just needs more time to find the optimal combinations. But advertisers and PPC managers often can’t afford to wait months, or quarters, for better results.