How I Cut CPCs by 40% in 2 Weeks With Expanded Text Ads

In his book Digital Marketing in an AI World, Frederick Vallaeys makes the point that there are some things machines can do better than humans and some things humans can do better than machines. It’s by combining these strengths that we find the best results.

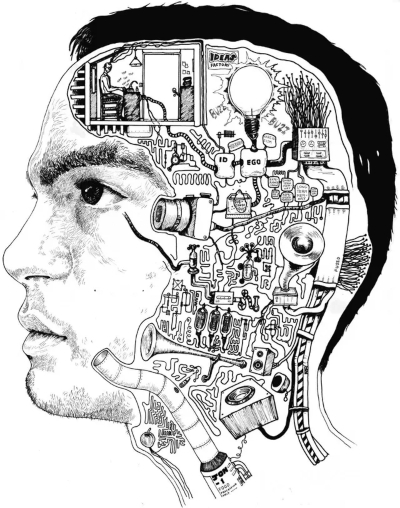

One area where this holds true is Search text ad creative. A machine is better able to take data about text ad performance and decide which ads, headlines, and descriptions are the best options for each searcher. It can do this because it can process far more factors than humans have the capacity or time to process.

On the flip side, machines still aren’t very good at coming up with the inputs themselves. This is where we humans still need to shine.

A new way to test ads

Machines don’t know the target audience and can’t craft the messaging that’s going to resonate and connect with them. They’re good at making decisions about what already exists. But they’re not good at coming up with something entirely new.

Because of this, ad testing has taken on a whole new form. Instead of running ads side by side and splitting traffic between them, we give machines inputs and watch how they adjust to those inputs over time based on their performance.

Instead of pulling insights from head-to-head data comparisons, we pull them from the data trends shaped by what the machine decides to do.

The reason we don’t use head-to-head comparisons is that when we do, we fall into the trap Martin Roettgerding points out in his blog post series debunking ad testing…

We tend to give 100% of the credit for an ad’s performance to the ad text. We think that the ad itself determines its CTR. In reality though, the contents of an ad are just one of many aspects that influence CTR.

Some other elements that come into play include…

- The auctions each ad wins
- The network they’re shown on
- The ad slot they’re shown in
- The position on the page they’re shown in
- The device they’re shown on

In fact, there are so many variables in play that it’s not fair to point to ad text differences as the definitive reason A/B ad tests produce winners and losers.

Look for how machines use your inputs

Therefore, the way we need to look at this is not by making judgments of an ad based on its numerical performance compared to other ads. Instead, we make judgments based on how much the machine is using our different inputs. If it’s using an input a majority of the time, it means that input is the best option a majority of the time compared to the others. If it never uses an input, it means that input is never the best option.
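To make “how much the machine is using an input” concrete, one rough proxy is each ad’s fraction of the ad group’s impressions over the same period. Below is a minimal Python sketch, assuming you have exported impressions per ad into a simple mapping; the ad names and numbers are made up for illustration. Note that this “usage share” is just a ratio computed here, not Google Ads’ own Impression share metric, which measures something different.

```python
def usage_share(impressions_by_ad: dict[str, int]) -> dict[str, float]:
    """Return each ad's fraction of the ad group's total impressions (0.0 to 1.0).

    This is a simple ratio for comparing inputs within one ad group; it is not
    the Google Ads "Impression share" metric.
    """
    total = sum(impressions_by_ad.values())
    if total == 0:
        # No data yet, so nothing can be said about any input.
        return {ad: 0.0 for ad in impressions_by_ad}
    return {ad: count / total for ad, count in impressions_by_ad.items()}


# Hypothetical impressions for one ad group over the same date range.
shares = usage_share({"Ad A": 600, "Ad B": 300, "Ad C": 100, "Ad D": 0})
for ad, share in sorted(shares.items(), key=lambda item: item[1], reverse=True):
    print(f"{ad}: {share:.0%}")
```

An ad stuck at 0% over a long window is the “never the best option” case; a low but non-zero share means the machine still picks it for some auctions.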

If an input isn’t used very often, that doesn’t mean it’s useless and should be deleted (unless it never gets used). That was the conclusion we used to come to. We used to simply delete the loser of an A/B test because we didn’t have a machine that could choose which ad was best to serve in each and every auction.

Since we have these systems now, we no longer focus on A/B testing ads. We focus on the quality of the inputs we’re giving the machines to test the ads. The machines can’t create quality (yet at least). They can only analyze the metrics associated with it. This is how humans and machines can work together to get the best results possible.

Give the machine quality inputs

Coming up with some inputs isn’t all that hard. But remember I said that our goal isn’t just having inputs to fill space. If you give a machine crappy inputs, it will still output crappy performance. It’s like creating a really great recording of a really bad singer. It’s still going to be hard on the ears.

Our goal should be to create QUALITY inputs for the machine to work with.

But coming up with quality ads takes work, and coming up with a large variety of them takes a real investment of time and energy. After all, character counts are limited, and there’s only so much you can say about some products and services, right? It’s easy to run out of ideas.

So what do we do?

A trick to produce new ideas

Well, I’ve learned a few different tricks over the years. But the one I’m going to share with you now is, I’d say, the main way I’m able to produce so many new ideas…

I simply pull them from the search queries that get matched to the ads in the ad groups I want to add new variations to.

But then on March 17th of this year, I homed in on this specific ad group to see if I could improve our results even further by adding new ad copy that might be relevant to more of the queries we were entering auctions for. Over the following weeks, I took an ad group with 3 ads and added 6 more to the mix.

Diving into my search query reports, I found people performing searches like…

- keto diet recipes for dinner
- keto diet tasty recipes
- best keto recipes ever

To me, these longer queries are great for giving more detail about what’s going on inside of people’s heads when they search, whether or not they include it in the query they perform.

These queries gave me great new ideas for headlines and descriptions to try as new inputs.
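Scanning a long search terms report by eye works, but a rough word count can help surface recurring themes. Here is a minimal Python sketch, assuming the queries have been copied out of the report into a list. It counts words that show up in queries but not yet in your ad copy. The ad text and stopword list are hypothetical placeholders, and single-word counts are a crude signal, so treat the output as prompts for a human writer rather than finished headlines.

```python
import re
from collections import Counter

# Hypothetical stopword list; extend it for your own account.
STOPWORDS = {"a", "an", "and", "for", "in", "of", "on", "the", "to", "with"}


def tokenize(text: str) -> list[str]:
    """Lowercase the text and split it into simple word tokens."""
    return re.findall(r"[a-z0-9']+", text.lower())


def candidate_terms(
    queries: list[str], existing_ad_text: str, top_n: int = 10
) -> list[tuple[str, int]]:
    """Count query words that don't already appear anywhere in the ad copy."""
    already_used = set(tokenize(existing_ad_text))
    counts = Counter()
    for query in queries:
        for word in tokenize(query):
            if word not in STOPWORDS and word not in already_used:
                counts[word] += 1
    return counts.most_common(top_n)


# Queries from the search terms report quoted above; the ad text is made up.
queries = [
    "keto diet recipes for dinner",
    "keto diet tasty recipes",
    "best keto recipes ever",
]
result = candidate_terms(queries, existing_ad_text="Easy Keto Recipes")
print(result)
```

With this made-up ad text, words like “dinner” and “tasty” point to angles (a dinner-focused headline, a taste-focused description) that the existing copy doesn’t cover yet.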

The results

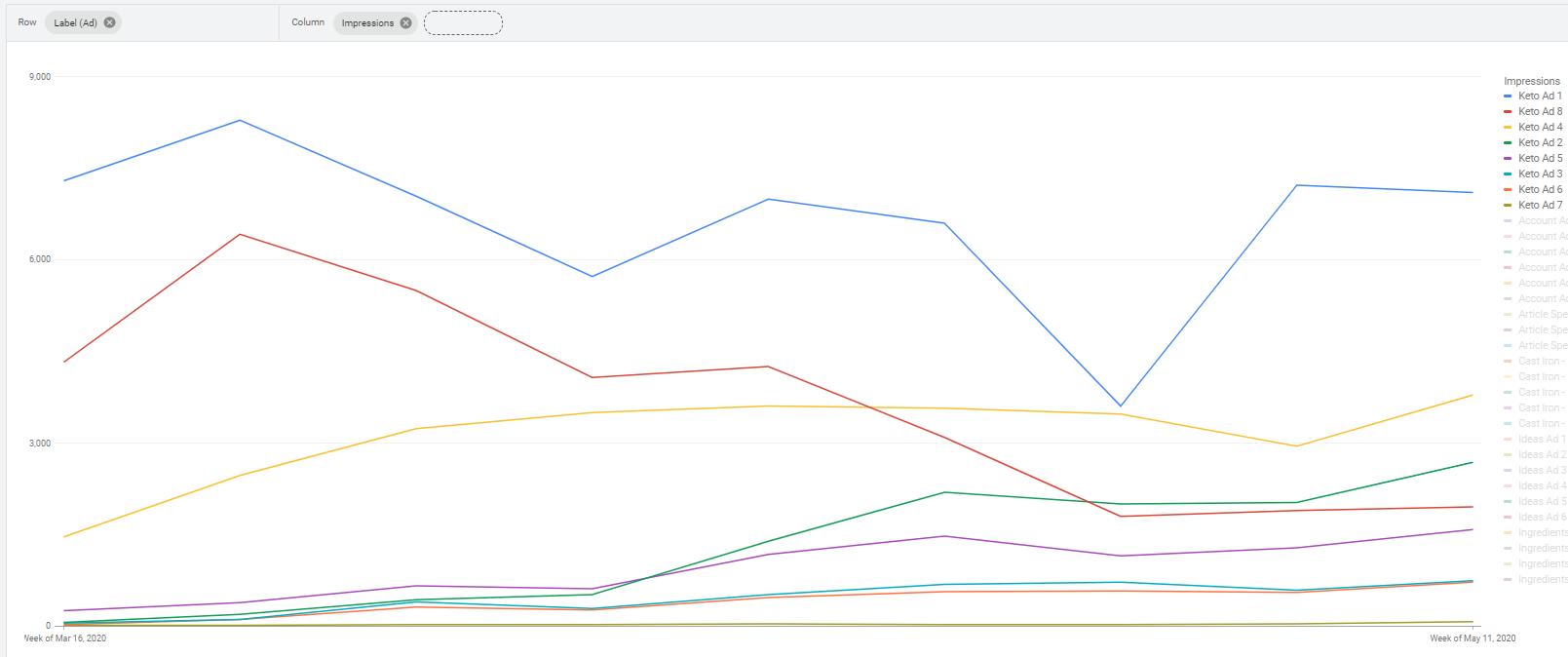

To see what happened, let’s take a look at how Impressions changed week to week throughout the time period. As you can see below, from the day I started the new ads to 9 weeks later, a lot changed.

While the ad that was garnering the top number of Impressions was still doing so at the end of the 9 weeks, many of the other ads changed place over time as the Google Ads machine learning adjusted to the data it was seeing.

By the last week, the ad that had been getting the 2nd most Impressions was 4th. The ad that had been getting the 3rd most Impressions was 2nd. And an ad that got almost no Impressions in the first week got the 3rd most Impressions in the last week.

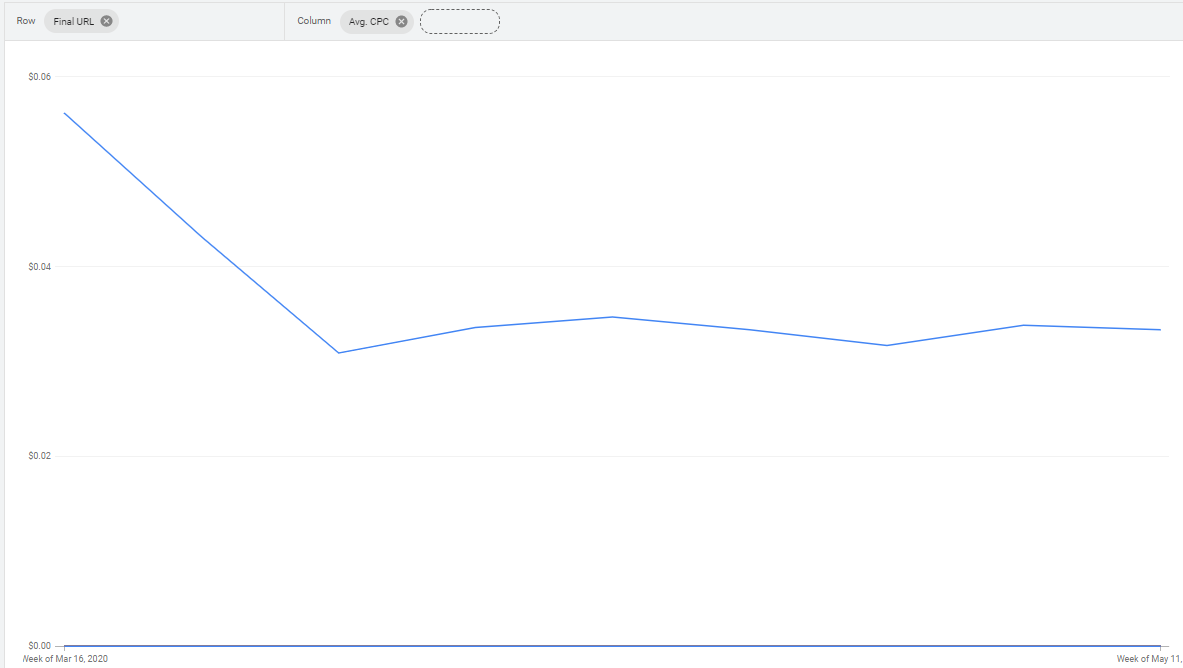

But we don’t really care which ad gets Impressions. We care about the metrics that matter. What was already a low CPC at $0.05 became even lower.

Within 2 weeks of starting to add the new inputs, the Avg. CPC was $0.03, a 40% reduction! Wow, I thought things might improve a little, but that was a real shocker to me.
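The 40% figure is the standard percent-change calculation applied to the two average CPCs:

$$
\frac{\$0.03 - \$0.05}{\$0.05} = \frac{-\$0.02}{\$0.05} = -0.40 = -40\%
$$

Because both CPCs are reported rounded to the nearest cent, the exact change could be somewhat larger or smaller than 40%.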

As time goes on and you continue to add new variations to ad groups, you’ll notice some variations won’t be getting enough Impressions to justify their sticking around, at which point you can feel free to pause them to keep your account uncluttered.
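One way to make “not enough Impressions to justify sticking around” concrete is a simple share threshold. The Python sketch below is an illustration under assumptions: the 2% share cutoff and the 1,000-impression minimum are arbitrary placeholders, not Google Ads guidance, and the impression counts are made up. Pick thresholds that fit your own account’s volume, and give new variants time to collect data before judging them.

```python
def ads_to_consider_pausing(
    impressions_by_ad: dict[str, int],
    min_share: float = 0.02,  # hypothetical cutoff: under 2% of the ad group's impressions
    min_total: int = 1000,  # hypothetical minimum before judging any variant
) -> list[str]:
    """Return ads whose share of the ad group's impressions is below min_share.

    Returns an empty list while the ad group has fewer than min_total
    impressions, so variants aren't judged on too little data.
    """
    total = sum(impressions_by_ad.values())
    if total < min_total:
        return []
    return sorted(
        ad for ad, count in impressions_by_ad.items() if count / total < min_share
    )


# Hypothetical impression totals for one ad group over several weeks.
totals = {"Ad A": 5000, "Ad B": 4000, "Ad C": 900, "Ad D": 100}
print(ads_to_consider_pausing(totals))
```

The function only flags candidates; deciding whether to pause them is still a human call.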

And as you keep putting in the effort to give the machine quality inputs to work with, you’ll learn what best attracts searchers to your site, which can improve your Quality Score and, in turn, lower your CPCs.